Designing for AI Trust in High-Stress Support: Sentiment Analysis

AI/ML Design | Enterprise UX

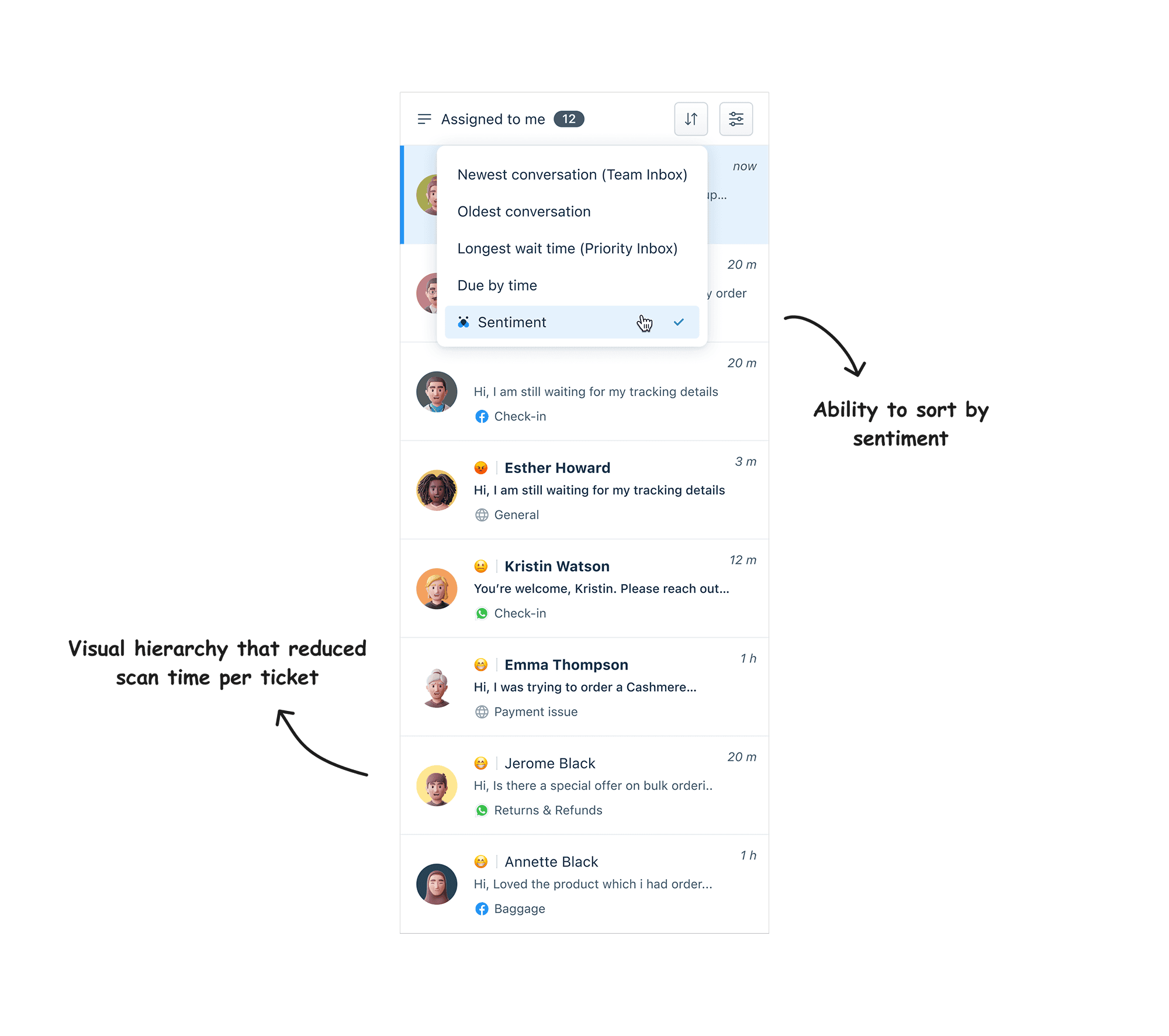

I redesigned how 10,000+ support agents decide which customer to help first by optimizing for speed of comprehension, because agents needed instant clarity while handling up to 5 conversations simultaneously.

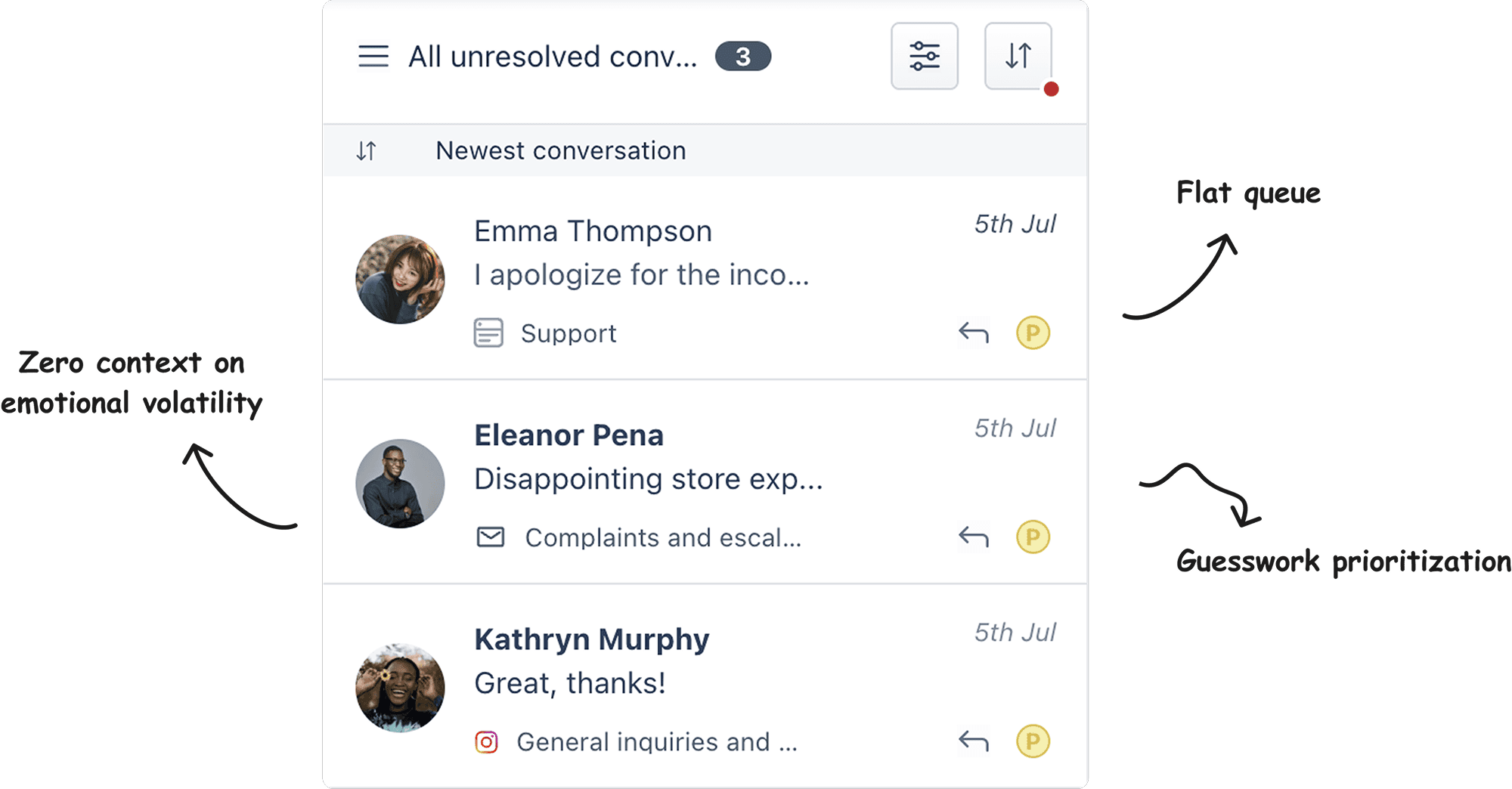

The Core Challenge: Support agents were handling 3–5 simultaneous conversations with no clear way to prioritize. Freshworks needed a sentiment analysis system to help agents identify upset customers faster and reduce churn, but the real problem was designing something agents would trust enough to rely on under pressure.

My Approach: I advocated for emoji-based sentiment indicators over numerical scores, colored cards, and directional arrows. Emojis were simple, universally understood, and didn't require cognitive translation when agents were already stressed and overwhelmed.

The Impact: Agents began relying on the system within the first week of rollout. The sentiment framework I created is now being adopted by two other product teams at Freshworks. But the bigger win? I learned that designing for AI isn't about making the technology smarter; it's about making human judgment easier.

The system I was designing within

This project sat at a messy intersection. Freshdesk and Freshchat were separate products with different interaction patterns. Customer support teams ranged from 5-person startups to 500-agent enterprise operations.

And somewhere in the middle, a machine learning model was trying to read human emotion from text, including sarcasm, cultural context, and the difference between "I'm fine" (genuine) and "I'm fine" (furious).

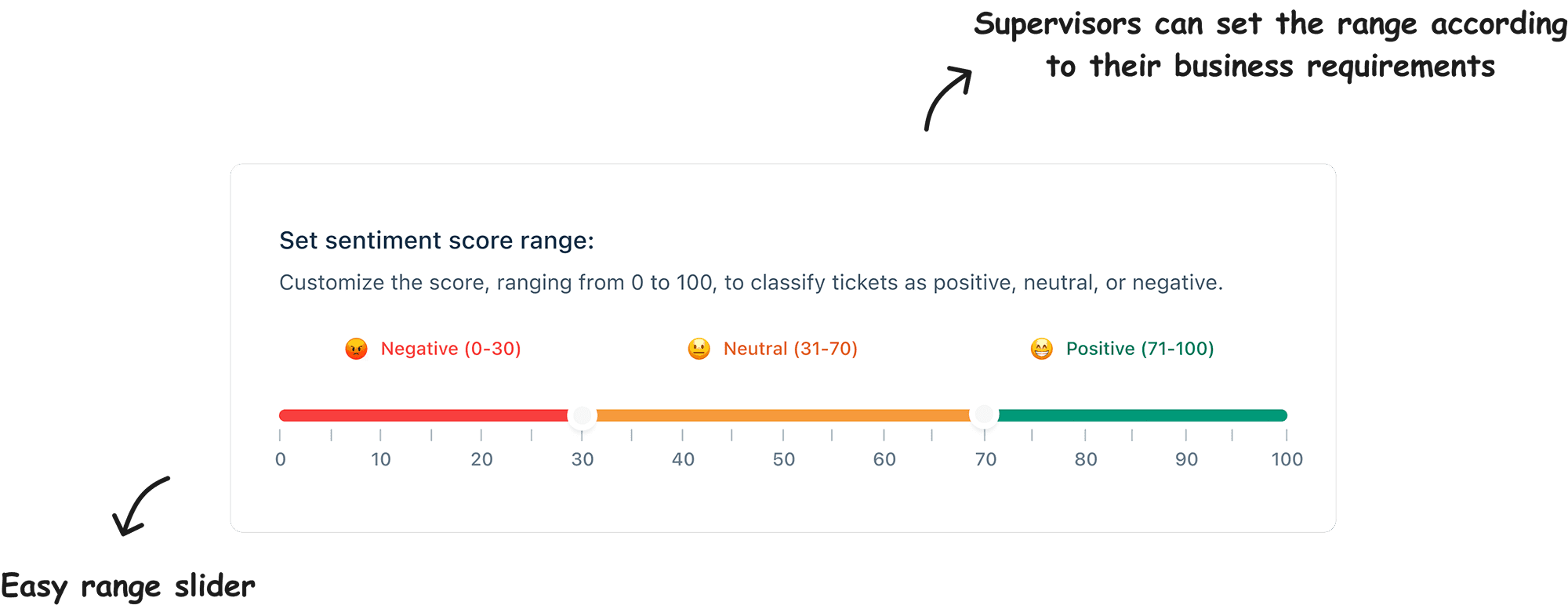

The constraint that shaped everything: Each company had its own threshold for what counted as "negative" sentiment. The AI had to work across all of them, but there was no universal truth about emotion.

A B2B SaaS company might tolerate frustration differently than a healthcare provider.

What I couldn't change:

The ML model itself was still being refined by the PMs and data team. I had to design the intervention layer between the model and the agents, meaning how agents would actually see and act on AI predictions, without knowing the final model accuracy. This taught me to design for uncertainty as a feature, not a bug.

The initial brief was straightforward: help agents prioritize chats faster to reduce churn.

But when I reached out to Freshworks' own support team for raw data on their workflows, I discovered that the agents were already handling 3–5 chats or tickets simultaneously. They were stressed, making constant judgment calls about who to help first, trying not to lose any customers. Predicting sentiment while juggling multiple conversations is cognitively exhausting.

The real problem:

Agents weren't slow because they lacked information. They were slow because they didn't trust any system to make the prioritization decision for them. They'd rather manually scan conversations and use their own judgment than rely on something they didn't understand.

The insight that reframed my approach

I wasn't designing a prioritization tool. I was designing a relationship between human expertise and AI assistance. This reframed the entire problem. Instead of optimizing for speed alone, I optimized for calibrated trust.

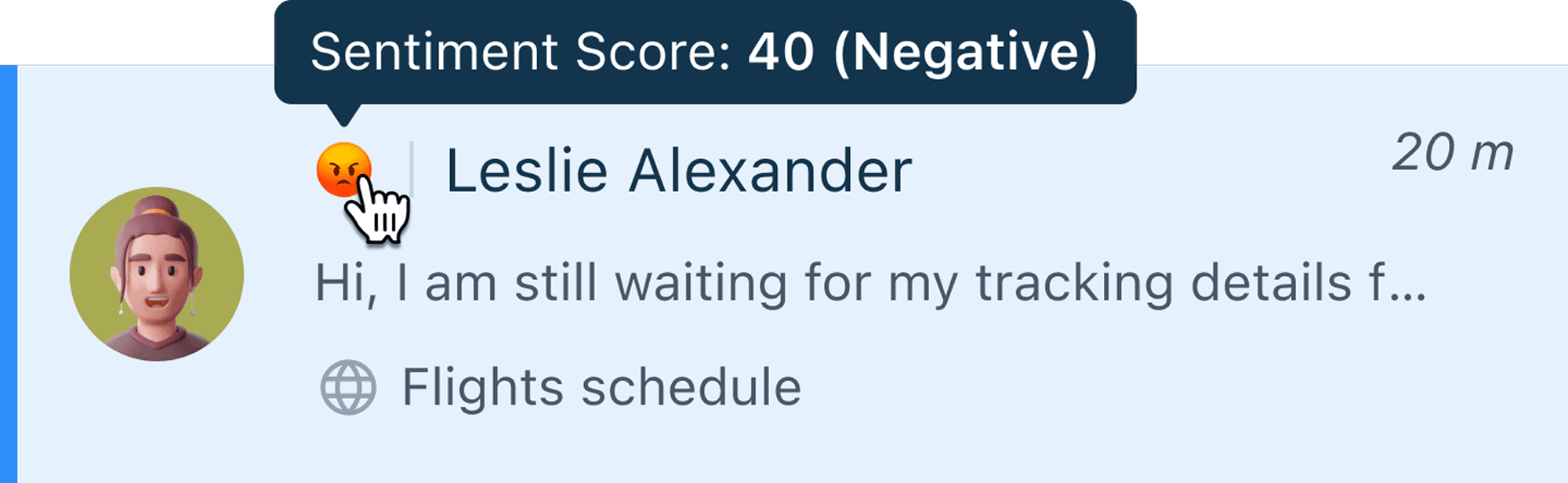

The Decision That Defined the Project: How should we visually represent sentiment to stressed support agents?

Options on the table:

Show just the numerical sentiment score (0.87 negative)

Use colored cards (red = negative, orange = neutral, green = positive)

Show directional arrows (↓ negative, → neutral, ↑ positive)

Use emoji faces (😊 😐 😟) with visual weight to show confidence
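One way to read "visual weight to show confidence" in the last option is to scale an emoji's opacity with the model's confidence. The sketch below is only an illustration of that idea: the function name `visualWeight`, the 0 to 1 confidence scale, and the minimum opacity of 0.4 are all assumptions, not details of the shipped design.

```typescript
// Hypothetical sketch: names, the confidence scale, and the opacity range
// are illustrative assumptions, not the production implementation.

// Lowest opacity we allow, so a low-confidence emoji stays legible.
const MIN_OPACITY = 0.4;

// Maps a model confidence in [0, 1] to an opacity in [MIN_OPACITY, 1].
// Out-of-range inputs are clamped rather than rejected.
function visualWeight(confidence: number): number {
  const clamped = Math.min(1, Math.max(0, confidence));
  return MIN_OPACITY + (1 - MIN_OPACITY) * clamped;
}

// A confident prediction renders at full strength; an uncertain one is
// visibly lighter but still readable.
console.log(visualWeight(0.95));
console.log(visualWeight(0.2));
```

A linear mapping keeps the behavior easy to explain to agents: a fainter face means the system is less sure, with nothing else to decode.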

What I Pushed For: Emojis

I advocated strongly for emoji-based sentiment indicators, even though some stakeholders wanted to show the raw sentiment scores or use color-coded cards.

Why emojis?

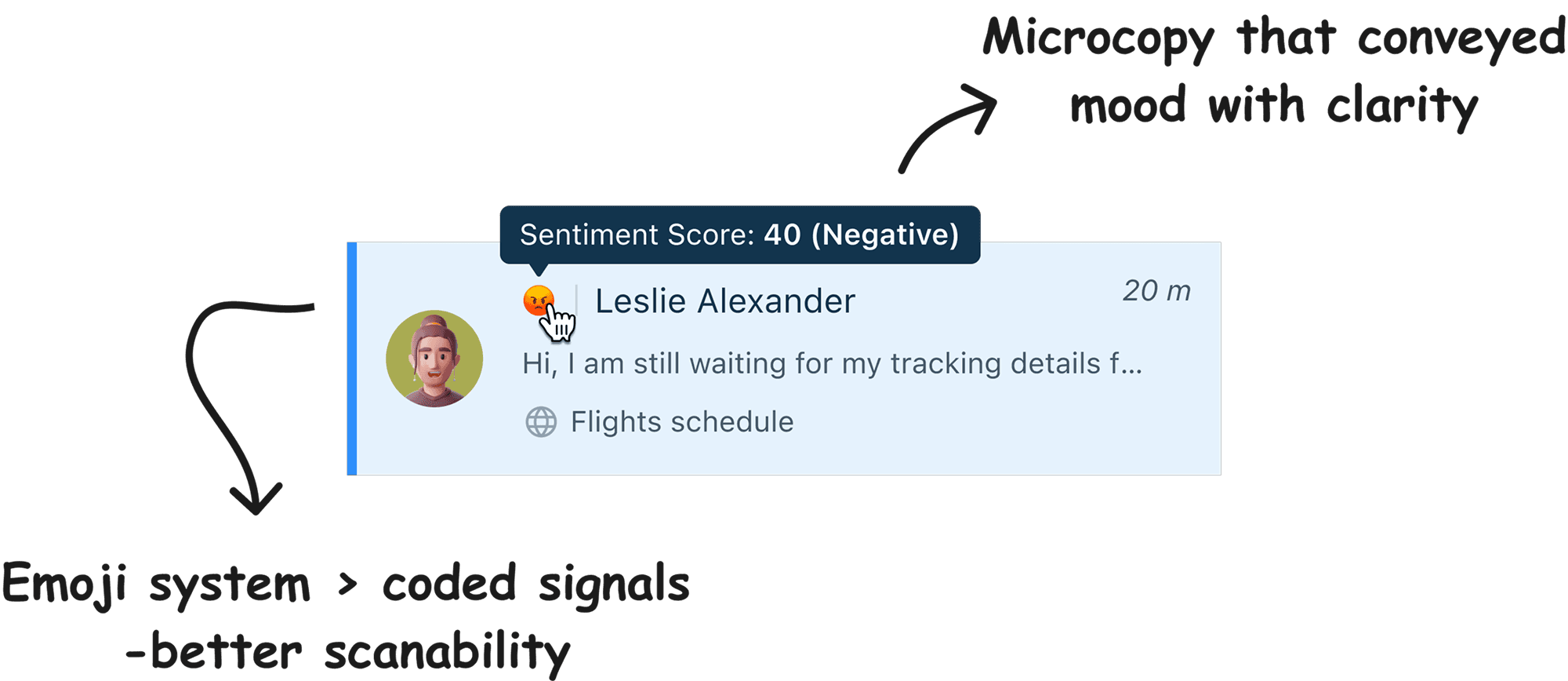

Universal and Obvious: Emojis are understood across cultures and languages. A 😟 face means "upset" without requiring any translation or learning. When you're stressed and handling five conversations, you need instant comprehension, not interpretation.

Matched Agents' Existing Mental Models: When agents read customer messages, they already think in emotional terms: "This person is frustrated," "This customer is happy," "This one is neutral." Emojis map directly to how agents already conceptualize emotion, not as numbers or scores, but as human states.

Simple and Low Cognitive Load: Agents told me they were already overwhelmed. Any system that added complexity by requiring them to learn what colors mean, interpret what "0.87" means, or remember what arrows signify would add to their stress rather than reduce it.

Built for Trust: Because emojis are so familiar and obvious, agents could quickly verify if the AI's assessment matched their own reading of the conversation. If they saw 😟 and the customer's message felt angry, the system reinforced their judgment. If it didn't match, they could override it without second-guessing themselves.

What I Optimized For: Trust, Not Features

Throughout this project, I kept returning to one question: What will make agents actually use this system instead of ignoring it? The answer wasn't accuracy. It was trust.

How I optimized for trust:

Made it effortless to understand: The emoji system required zero learning curve. Agents saw 😟 and immediately knew "this customer is upset" without having to decode anything.

Supported agent judgment, didn't override it: The design reinforced what agents already sensed from reading customer messages. It acted as confirmation of their instincts, not a replacement for their expertise.

Allowed for organizational customization: Because different companies have different sentiment thresholds, the solution needed to let each organization set its own definitions of what counted as negative, neutral, or positive. The emoji system supported this flexibility without becoming complicated.
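The organizational customization described above can be sketched as a small per-organization configuration that turns a model score into one of the three emojis. This is a minimal sketch under stated assumptions: the score range of -1 to 1, the `SentimentThresholds` shape, the function names, and both example organizations are hypothetical and do not describe the actual Freshworks schema.

```typescript
// Hypothetical names throughout: SentimentThresholds, classify, toEmoji, and
// the example cut-offs are illustrative assumptions, not a real schema.

type Sentiment = "negative" | "neutral" | "positive";

interface SentimentThresholds {
  // Scores at or below this value are shown as negative.
  negativeMax: number;
  // Scores at or above this value are shown as positive.
  positiveMin: number;
}

const EMOJI: Record<Sentiment, string> = {
  negative: "😟",
  neutral: "😐",
  positive: "😊",
};

// Assumes the model emits a score in [-1, 1], where -1 is most negative.
function classify(score: number, t: SentimentThresholds): Sentiment {
  if (t.negativeMax >= t.positiveMin) {
    throw new Error("negativeMax must be below positiveMin");
  }
  if (score <= t.negativeMax) return "negative";
  if (score >= t.positiveMin) return "positive";
  return "neutral";
}

function toEmoji(score: number, t: SentimentThresholds): string {
  return EMOJI[classify(score, t)];
}

// Two made-up organizations with different tolerance for frustration.
const saasOrg: SentimentThresholds = { negativeMax: -0.5, positiveMin: 0.3 };
const healthcareOrg: SentimentThresholds = { negativeMax: -0.2, positiveMin: 0.3 };

// The same model score surfaces differently per organization.
console.log(toEmoji(-0.3, saasOrg));       // 😐
console.log(toEmoji(-0.3, healthcareOrg)); // 😟
```

Keeping the thresholds in configuration means the agent-facing vocabulary stays at three faces no matter how each organization tunes its cut-offs.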

The Impact (And What I'm Carrying Forward)

Agents started relying on the sentiment system within the first week of rollout. The emoji approach worked because it matched their existing mental models: they didn't have to learn a new visual language under pressure.

But the impact I'm most proud of isn't a metric; it's a shift in how I think about design:

I learned that trust is more valuable than sophistication. A simple, obvious solution that people actually use beats a complex, "smart" solution that people ignore.

I learned that designing for AI means designing for its limitations, not just its capabilities. The question isn't "what can the AI do?", it's "how do we help people use the AI effectively even when it's imperfect?"

I learned that informal research with the people who'll actually use your product is more valuable than formal studies. Building relationships with Freshworks' support team taught me more about their needs than any structured interview process could have.

What I'm taking forward: When I design AI-powered products now, I start by asking:

Who needs to trust this system, and what would break that trust?

What's the simplest visual language that matches users' existing mental models?

How do we design for uncertainty and imperfection as givens, not problems to hide?

This project taught me that the best design isn't always the most technically sophisticated. It's the design that removes friction between human judgment and the tools meant to support it. That's the kind of designer I want to be.